Symbian OS Platform Security/05. How to Write Secure Servers

Reproduced by kind permission of John Wiley & Sons.


What Is a Secure Server?

In this chapter we look in detail at writing server software. Servers demand special attention as they are, in many ways, a cornerstone of the whole platform security architecture, as we described in Chapter 2. A typical server will have one or more capabilities to enable it to perform its functions, and, in the same way as any other application software, it has a duty to moderate and protect its use of these capabilities, so that it is not unduly influenced in its usage of them by other, less trusted processes. However, servers seem to have a dichotomy here, as the very purpose of a server is to provide services to other processes, which will often have no particular trust level. Resolving this apparent dichotomy is a key part of good server design.

Symbian OS Servers

Before diving into the details of secure servers, we should recap the basics of what we mean by a ‘server’.

Symbian OS is built around a microkernel, with system services such as the file server and communication protocol stack implemented in ‘user space’ processes. Application processes gain access to these services via a robust inter-process communication (IPC) mechanism modeled around a client–server architecture.

The primary purpose of a server is to mediate and arbitrate for multiple clients who share access to a resource. The server code executes within one process, but clients of its services may execute in multiple processes in the system.

As described in Chapter 2, the process is the unit of trust in the platform security architecture. This means that the process boundary is also the trust boundary. As client–server is by far the most commonly used generic IPC mechanism within Symbian OS, it is the most natural point at which to enforce policies regarding this crossing of the trust boundary. The fact that the client–server IPC is designed for robustness, as are the servers themselves to a large extent, is an additional benefit that helps in this task. The kernel is responsible for mediating this communication between client and server, and so must be mutually and universally trusted by these processes to carry out this role in the secure environment.

There are, however, other places where the process and trust boundaries can be crossed. Examples range from the use of shared memory chunks or global semaphores, through to message queues, properties, settings or even the file system itself. Some of these are discussed here or in subsequent chapters; however, client–server is the primary mechanism, and we recommend that you use it as the primary interface to such services. It is what we concentrate on in this chapter.

One exception to the use of client–server is device drivers. The kernel provides the means for user code to communicate with hardware device drivers via channels into the kernel executive. However, both conceptually and in practical terms there are many similarities and equivalencies between a channel to a logical device driver (LDD), and a client–server connection to a server. For this reason, a great many of the principles and procedures presented here are quite relevant to producers of LDDs. (In this context a physical device driver (PDD) is analogous to a plug-in to a server. See Chapter 6 for more on this.)

Writing Symbian OS servers was once considered something of a ‘black art’; however, several recent books, such as Symbian OS C++ for Mobile Phones [Harrison 2003] and Symbian OS Explained [Stichbury 2005], have gone a long way to demystify this process. Rather than repeat what is covered in those volumes, we will build upon them, highlighting points where interface methods or development methodology may differ when working in the platform security world.

An Example Secure Server

Before getting started on the process of designing security measures for your servers, it is useful to have a look at how the key concepts work in practice. For this reason, we will walk you through a simple example of securing your first server.

As already mentioned, the role of a server is to mediate access to a shared resource, to enable one or more clients to access that resource, sequentially or concurrently – much in the same way that the server at the front of a school dinner queue mediates access to the pot of stew, and acts as a proxy to accessing it on behalf of each hungry pupil standing in line!

To keep life simple for server developers, whilst also being frugal with system resources, servers are typically implemented within a single thread of execution in a dedicated process, ensuring the server’s exclusive access to the underlying resource being shared.

Multiple clients can then access the server, via the kernel’s IPC mechanism, by establishing a session with the server. The clients can then pass messages to the server to request that certain actions be performed. The messages are delivered to the server in order, and processed by the server as appropriate. The command protocol used in these messages is defined by the server. For this reason, virtually all servers supply a dedicated client-side library (DLL) that encapsulates this protocol, and generally serves to make the application developer’s life easier.

Let’s see what this all means in practice, with this example implementation of a client-side DLL:

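// Function numbers shared by the client DLL and the server
enum TSimpleServerFunction
{
    ESimpleServFn1,
    ESimpleServFnGetUserInfo
};

class RSimpleServer : public RSessionBase
{
public:
    IMPORT_C TInt Connect();
    IMPORT_C TInt GetUserInformation(TInt aInfoRequired, TDes8& aResult) const;
};

_LIT(KSimpleServerName, "com_symbian_press_testserver1");

static _LIT_SECURITY_POLICY_S0(KSimpleServerPolicy,
    0xE1234567); // a test UID used as the server’s SID

EXPORT_C TInt RSimpleServer::Connect()
{
    // Connect only if the server process has the expected SID
    return CreateSession(KSimpleServerName, TVersion(), -1,
        EIpcSession_Unsharable, &KSimpleServerPolicy());
}

EXPORT_C TInt RSimpleServer::GetUserInformation(TInt aInfoRequired,
    TDes8& aResult) const
{
    // Argument 0: which item is wanted; argument 1: writable result buffer
    return SendReceive(ESimpleServFnGetUserInfo, TIpcArgs(aInfoRequired,
        &aResult));
}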

If you are familiar with client–server development for previous versions of Symbian OS, there should be little surprise here.

The base class for the client side of a client–server connection is RSessionBase; creating a connection to a server is achieved through the call RSessionBase::CreateSession(). The version used here is the new overload added in Symbian OS 9.x, and is of the form:

IMPORT_C TInt CreateSession(const TDesC& aServer, const TVersion& aVersion,
    const TInt aAsyncMessageSlots, TIpcSessionType aType,
    const TSecurityPolicy* aPolicy=0, TRequestStatus* aStatus=0);

The parameter we are most interested in is the pointer to an object of type TSecurityPolicy. This allows the client code to stipulate criteria for the server to which it will connect. In the example, we state that the server must be running in a process with a SID of 0xE1234567 (a test UID, see Chapter 3 for more information), to guard against server spoofing. You will see other uses of these policy objects throughout this book. Other than this, establishing the session is essentially the same as before.

Having established the session, requests can be sent to the server using RSessionBase::SendReceive(). In the example the GetUserInformation method does exactly this. This has not changed significantly, although small improvements have been made to allow more robust marshalling of arguments. Specifically, you can see the TIpcArgs class being used to provide a typed container for the arguments to the message; TIpcArgs carries flags to allow the kernel to differentiate the various argument types, and thereby ensure that the server respects them correctly. This mechanism is described in more detail in Symbian OS Internals [Sales 2005].
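The effect of typed arguments can be illustrated with a small model. The sketch below is standard C++ rather than Symbian C++, so that it compiles anywhere. ArgType, IpcArgs, MakeGetUserInfoArgs and WriteArg are hypothetical names invented for illustration, and the kernel's real checks (and the exact error codes it returns) may differ. The sketch shows the principle: because each slot records what kind of argument the client supplied, a server that tries to write into an integer or a constant descriptor is refused, rather than silently overwriting client memory.

```cpp
#include <array>
#include <cassert>
#include <string>

// Illustrative model only: these are not Symbian types. Each argument slot
// carries a type tag, much as TIpcArgs records a type for each argument.
enum class ArgType { Unspecified, Int, DesC8, Des8 };

// Stand-in error constants (values chosen to mirror Symbian's).
constexpr int KErrNone = 0;
constexpr int KErrArgument = -6;
constexpr int KErrBadDescriptor = -38;

struct IpcArgs {
    std::array<ArgType, 4> types{};       // value-initialized to ArgType::Unspecified
    std::array<int, 4> ints{};            // payload for Int slots
    std::array<std::string*, 4> descs{};  // client buffers for descriptor slots
};

// Hypothetical helper with the same shape as TIpcArgs(aInfoRequired, &aResult).
IpcArgs MakeGetUserInfoArgs(int aInfoRequired, std::string* aResult) {
    IpcArgs args;
    args.types[0] = ArgType::Int;
    args.ints[0] = aInfoRequired;
    args.types[1] = ArgType::Des8;        // writable descriptor
    args.descs[1] = aResult;
    return args;
}

// Server-side write into slot aIndex. Only a writable descriptor slot accepts
// data; any other slot is refused instead of being reinterpreted.
int WriteArg(IpcArgs& aArgs, int aIndex, const std::string& aData) {
    if (aIndex < 0 || aIndex >= static_cast<int>(aArgs.types.size()))
        return KErrArgument;
    if (aArgs.types[aIndex] != ArgType::Des8 || aArgs.descs[aIndex] == nullptr)
        return KErrBadDescriptor;
    *aArgs.descs[aIndex] = aData;
    return KErrNone;
}
```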

Now let’s see how this message is handled on the server side:

simpleserver.mmp:

TARGET      simpleserver.exe
TARGETTYPE  exe
UID         0 0xE1234567 // test UID as UID3 / SID
SOURCE      simpleserver.cpp

simpleserver.cpp:

class CSimpleServer : public CServer2
{
protected:
    // Overrides the pure virtual CServer2::NewSessionL(), which is const
    CSession2* NewSessionL(const TVersion& aVersion,
        const RMessage2& aMessage) const;
    //...
};

static _LIT_SECURITY_POLICY_C1(KSimpleServerConnectPolicy,
    ECapabilityReadUserData);

CSession2* CSimpleServer::NewSessionL(const TVersion& /*aVersion*/,
    const RMessage2& aMessage) const
{
    // Refuse the connection unless the client holds ReadUserData
    if(!KSimpleServerConnectPolicy().CheckPolicy(aMessage,
        __PLATSEC_DIAGNOSTIC("CSimpleServer::NewSessionL KSimpleServerConnectPolicy")))
        User::Leave(KErrPermissionDenied);

    // proceed with handling the connect request (version check omitted)
    return new(ELeave) CSimpleSession;
}

Here you see a server-side implementation of a security policy test. A simple way to protect an IPC interface is by restricting who can establish sessions with the server. After a connect message has been processed by the kernel, it reappears in user space in the server process, and is handled by the framework code – specifically the CServer2 base class. This then calls the NewSessionL() method that is implemented by the derived concrete class, in our example, CSimpleServer. Here we perform this simple policy check: a security policy object is defined that requires the client to hold the ReadUserData capability. The connect message, as indicated by the RMessage2 parameter to NewSessionL(), is tested against this policy, and if it fails, the method throws a leave exception, with the new error code KErrPermissionDenied.

If the server has a more complex security policy, it may not be appropriate to restrict access to clients at the point that the connection is established, but instead individual IPC operations might be restricted.

class CSimpleSession : public CSession2
{
protected:
    void ServiceL(const RMessage2& aMessage);
    void GetUserInfoL(TInt aInfoRequired, TDes8& aResult);
    //...
};

static _LIT_SECURITY_POLICY_C1(KSimpleServerUserInfoPolicy,
    ECapabilityReadUserData);

void CSimpleSession::ServiceL(const RMessage2& aMessage)
{
    switch(aMessage.Function())
        {
        case ESimpleServFn1:
            // ... handle function
            break;

        case ESimpleServFnGetUserInfo:
            {
            if(!KSimpleServerUserInfoPolicy().CheckPolicy(aMessage,
                __PLATSEC_DIAGNOSTIC("CSimpleSession::ServiceL KSimpleServerUserInfoPolicy")))
                User::Leave(KErrPermissionDenied);

            // Process the request as normal. The local buffer is sized from
            // the maximum length of the client's output descriptor (argument 1).
            RBuf8 result;
            result.CreateL(aMessage.GetDesMaxLengthL(1));
            result.CleanupClosePushL();
            GetUserInfoL(aMessage.Int0(), result);
            aMessage.WriteL(1, result);
            CleanupStack::PopAndDestroy(&result);
            break;
            }

        default:
            User::Leave(KErrNotSupported);
        }

    aMessage.Complete(KErrNone);
}

Here we see that the server session code performs a very similar security policy check to the last example, but this time it is conditional on the value of aMessage.Function(). Here we are just picking out IPC messages that have a function value of ESimpleServFnGetUserInfo, which corresponds to the call to RSimpleServer::GetUserInformation() in the client library that we started off with. We could also take this down to the next level: the security policy might depend on the value of aInfoRequired that was passed into the client call. This is represented on the server side by the aMessage.Int0() message parameter, and an additional level of switch would be required in order to achieve this.
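The two-level check described above might look like the following. This is again a standard C++ model rather than real Symbian code. The enum values EInfoUserName and EInfoDeviceSerial, the Message struct and CheckRequest are hypothetical, as is the choice of which capabilities each value demands. The error constants are stand-ins that mirror the Symbian values. Note that unrecognized argument values are rejected, rather than falling through to an unchecked path.

```cpp
#include <cassert>
#include <set>

// Simplified, hypothetical model of a per-function, per-argument policy check.
enum class Capability { ReadUserData, ReadDeviceData };

enum Function { ESimpleServFn1 = 0, ESimpleServFnGetUserInfo = 1 };

// Hypothetical values for aInfoRequired (arrives on the server as Int0()).
enum InfoRequired { EInfoUserName = 0, EInfoDeviceSerial = 1 };

constexpr int KErrNone = 0;
constexpr int KErrNotSupported = -5;
constexpr int KErrPermissionDenied = -46;

struct Message {
    int function;                     // models RMessage2::Function()
    int int0;                         // models RMessage2::Int0()
    std::set<Capability> clientCaps;  // capabilities of the client process
};

bool Has(const Message& aMsg, Capability aCap) {
    return aMsg.clientCaps.count(aCap) != 0;
}

// Returns the code the session would complete the message with.
int CheckRequest(const Message& aMsg) {
    switch (aMsg.function) {
    case ESimpleServFn1:
        return KErrNone;  // unrestricted in this sketch
    case ESimpleServFnGetUserInfo:
        switch (aMsg.int0) {  // second-level switch on the argument value
        case EInfoUserName:
            return Has(aMsg, Capability::ReadUserData) ? KErrNone : KErrPermissionDenied;
        case EInfoDeviceSerial:
            return Has(aMsg, Capability::ReadUserData) && Has(aMsg, Capability::ReadDeviceData)
                       ? KErrNone
                       : KErrPermissionDenied;
        default:
            return KErrNotSupported;  // never trust unrecognized argument values
        }
    default:
        return KErrNotSupported;
    }
}
```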

From this quite simple example, we can pick out some important points which we will explore further in the remainder of this chapter:

- Both the client and the server can make use of the platform security architecture to protect their IPC boundaries.

- The client will typically have a simple security policy, and the changes to client-side code are minimal.

- The server can have as simple or as complex a policy as its IPC protocol demands, thus the changes to the server code may be simple or complex.

- A complex server security policy could result in a great deal of repetitive security check code in a standard form. CPolicyServer, described later, is a framework provided to help manage this complexity and minimize copy-and-paste bugs.

- Security policies are tested at the process boundary: the server does not rely on code in its client library to protect its interface.

- At any point where a trust boundary may be crossed, a check may be required.

Server Threat Modeling

Having now seen a simple example of how a server can protect its interface in practice, let’s take a step back and look at how to design security into a server. As the old adage goes, always design security in, rather than bolt it on as an after-thought.

As we recommend throughout this book, a good place to start is through a threat model.

Step 1 – Identify the Assets you Wish to Protect

In most cases, a server provides a layer of abstraction over a lower level resource, and provides access to this resource for its clients. For example, the file server provides a file system layer over the raw disk device driver interface to the hardware, and arbitrates access to this resource on behalf of all file-using clients.

The primary assets to be protected are the underlying resources and the means by which the server allows direct or indirect access to those resources. The file server must protect the raw disk driver interface in order to avoid physical disk corruption, but it must also protect assets that are derived from this underlying physical resource, the individual files themselves. The file server and the file-system drivers it hosts must work together to ensure that, whatever a client does to one file, the contents of none of the other files are altered. Additionally, in the new platform security data caging architecture (see Chapter 2), clients must only be able to read or write files to which they are permitted access. Finally, the server must ensure it does not compromise one client through the actions of another. For example, a malicious client should not be able to use the file server to write data into another client’s memory space – a duty of care, if you like.

To summarize, the assets for a typical server will consist of:

- the access to any ‘physical’ resources it owns (including its memory, data-caged files, and even its server name)

- any services it provides derived from or built on top of these resources

- the client sessions, which are assets to be protected from any other sessions.

Note that the order here is intended only to aid logical and comprehensive analysis; it is not intended to indicate any prioritization of risk levels.

Step 2 – Identify the Architecture and its Interfaces

As already stated, a server provides a layer of abstraction in a generic system model. It sits on top of lower layer (physical) services, and provides access to higher layer (application) clients. The most obvious interfaces a server exposes are:

- The IPC interface it opens to its clients, via the client–server framework.

- The interface to underlying services or resources it needs in order to function.

- Any other interfaces into the server’s process.

A server runs in a normal OS process – there may be occasions where one process would expose several server interfaces (such as ESOCK and ETEL, for instance) but often a server interface will have a dedicated process behind it. All the means of interfacing to a simple application process, as described in Chapter 4, should also be considered here – item numbers 2 and 3 in the above list are really just a reiteration of that. In this sense, a server process is the same as any other process, but with an increased attack surface due to its server interface.

Bear in mind the principle from Chapter 2, that the unit of trust in the platform security architecture is actually the operating system process, not the server. In many cases there is a one-to-one relationship between servers and processes, and this can help simplify one’s reasoning. Note that it is a practical impossibility for one Symbian OS server (that is, the RServer or RServer2 instance) to span multiple processes. However, when analyzing possible interfaces to a server, be aware that other code might execute within the same process as that server. Any interface opened by that code is also a potential interface to your server, so all interfaces into the process must be analyzed. It follows that all server interfaces into the process in question should be considered as a whole.

It may well be that you perform threat model analysis across a number of servers that co-operate to perform some common goal. Here you must look at both the external interfaces to the functional subsystem, and also analyze each internal interface, to ensure no ‘back doors’ into the subsystem are overlooked.

As with all processes, files used by the process should be identified because they form an interface with the process. In particular, do not overlook temporary files and log files, if they might be generated in a production (non-debug) build of your server.

As well as identifying the attack surface, this architectural analysis achieves an additional goal. By identifying the responsibilities of the server in question, and the interfaces that it depends on, the capability set of the server can be determined (if this is not already known).

Step 3 – Identify Threats to the Server

Using the information obtained from the previous steps, specific threats to the server can be identified and documented.

Detailed knowledge of the functionality provided by the server, and the way in which it is presented through its client API, is, naturally, very useful in driving this analysis. If the API is already well established, then working through it item by item, brainstorming on each of them, and on all other identified interfaces, is a methodical approach.

This is a good point to consider the capabilities held by the server. In many cases these might well be more numerous or more sensitive than those held by a typical application process. As such they provide a greater incentive for attack, and require a greater duty of care in identifying and addressing such threats. Threats can be brainstormed on a per-capability basis. For example, a server possessing the ReadUserData capability would need to consider if there is any way it could be manipulated or tricked into unknowingly revealing the user’s private data or leaking confidential information. Processes that do not possess this capability would have fewer threats to consider here. There is a strong practical argument for striving to limit the set of capabilities held by any one server!

Designing Server Security Measures

We will now review how a server can implement countermeasures in order to minimize the risk posed by the threats identified. Measures are targeted to address specific threats. If you find yourself getting involved in developing a complex security policy or mechanism for your server, it is often worth checking which threats the mechanism addresses, whether those threats justify the cost, and whether a simpler, more appropriate measure exists. Don’t forget hidden costs, such as maintaining the solution and reduced utility to the intended audience if the security policy is impractical.

The countermeasures are split into three broad types:

- platform security architecture – features provided ‘for free’ by the OS architecture

- server design and implementation – aspects of good server development that can work to mitigate security threats

- platform security mechanism – security mechanisms provided by the platform for use by the code, but which the code must be designed to utilize.

Platform Security Architecture

Loader Rules (prevent untrusted code execution in a trusted environment)

The platform security loader’s rules provide a strong level of protection against rogue or malicious code executing within servers and gaining access to server-owned resources. However, this is no protection against well-meaning but bug-infested code within your server!

Process Isolation (prevents tampering with server execution environment)

From its inception, Symbian OS was designed to support a strong model of process isolation, using the processor’s MMU to segregate physical memory address space. Indeed, the client–server architecture itself is a key part of this design, providing a robust means for processes to interact while minimizing the coupling between these processes. However, a number of the original APIs did provide means by which one process could interfere with another. These APIs had mainly been provided for the efficient implementation of specific use-cases on now outdated hardware. One example of this is the IPC v1 client–server APIs – hence the reason these are now superseded by the strongly-typed IPC v2 framework.

The above are generic features, afforded to all processes under the platform security architecture. However, they are worth repeating in this chapter on servers, as the server has an implicit responsibility not to undermine these mechanisms if it wishes to receive the benefit of them! For instance, a server should be particularly careful not to accidentally execute untrusted code, which could be reached via a pointer passed by its client (or indeed any other process). One particularly insidious way that this could happen is by calling virtual functions of C++ objects supplied by the client. If the client were able to tamper with such an object, it could change the object’s vtable pointer to cause arbitrary code to be executed. C++ objects that may have virtual functions must not be byte-copied from an IPC message; instead, objects should be externalized and internalized with rigorous bounds checking.
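Externalizing and internalizing with bounds checking can be sketched in standard C++ as follows. UserRecord, Externalize, Internalize, the length-prefixed little-endian layout and the 64-byte name limit are all assumptions for illustration. On Symbian OS you would more likely build on the stream classes, such as RDesReadStream. The key property is that the server reconstructs its own object from plain bytes and checks every length against the data actually received, so it never uses a client-controlled pointer or vtable pointer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical plain-data record: no virtual functions and no pointers.
struct UserRecord {
    std::string name;
    std::int32_t age;
};

constexpr std::uint32_t kMaxNameLength = 64;  // hypothetical server-side limit

void PutU32(std::vector<std::uint8_t>& aOut, std::uint32_t aValue) {
    for (int i = 0; i < 4; ++i)
        aOut.push_back(static_cast<std::uint8_t>(aValue >> (8 * i)));  // little-endian
}

// Client side: turn the object into a flat, length-prefixed byte sequence.
std::vector<std::uint8_t> Externalize(const UserRecord& aRecord) {
    std::vector<std::uint8_t> out;
    PutU32(out, static_cast<std::uint32_t>(aRecord.name.size()));
    out.insert(out.end(), aRecord.name.begin(), aRecord.name.end());
    PutU32(out, static_cast<std::uint32_t>(aRecord.age));
    return out;
}

// Server side: every read is bounds-checked against the bytes actually received.
class Reader {
public:
    explicit Reader(const std::vector<std::uint8_t>& aBuf) : iBuf(aBuf) {}

    std::uint32_t GetU32() {
        Require(4);
        std::uint32_t value = 0;
        for (int i = 0; i < 4; ++i)
            value |= static_cast<std::uint32_t>(iBuf[iPos + i]) << (8 * i);
        iPos += 4;
        return value;
    }

    std::string GetBytes(std::size_t aCount) {
        Require(aCount);
        std::string s;
        for (std::size_t i = 0; i < aCount; ++i)
            s.push_back(static_cast<char>(iBuf[iPos + i]));
        iPos += aCount;
        return s;
    }

    bool AtEnd() const { return iPos == iBuf.size(); }

private:
    void Require(std::size_t aCount) const {
        if (aCount > iBuf.size() - iPos)  // iPos never exceeds size, so no underflow
            throw std::out_of_range("truncated message");
    }

    const std::vector<std::uint8_t>& iBuf;
    std::size_t iPos = 0;
};

UserRecord Internalize(const std::vector<std::uint8_t>& aBuf) {
    Reader reader(aBuf);
    const std::uint32_t length = reader.GetU32();
    if (length > kMaxNameLength)
        throw std::length_error("name too long");  // reject before allocating
    UserRecord record;
    record.name = reader.GetBytes(length);
    record.age = static_cast<std::int32_t>(reader.GetU32());
    if (!reader.AtEnd())
        throw std::invalid_argument("trailing bytes");
    return record;
}
```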

Platform Security Mechanisms

Session Connect Policy Check (detects server name spoofing)

As we illustrated in the example earlier in this chapter, when the client library code connects to a server it can specify a security policy, which the server must satisfy before the connection will be allowed to go ahead. This does not prevent a spoof server from taking the name of your server, but does provide a means through which the client library can detect this.

ProtServ for System Servers

In order to stop spoof servers from taking the names of critical system servers, the name space is partitioned into ‘normal’ and ‘protected’ parts. The protected name space is defined by all server names beginning with the ‘!’ character. Registering server objects that have names beginning with this character with the kernel is only permitted for processes possessing the ProtServ capability; all others will receive an error return code from their call to CServer2::Start(). In this way, only processes trusted with this capability are permitted to provide system services. (As a rule of thumb, a system service can be considered to be one that would cause mobile phone instability if it were to have a fatal error and terminate abnormally.)
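The registration rule can be modeled in a few lines of standard C++. ServerNameRegistry, Process and the error codes returned are assumptions made for this sketch; the kernel keeps its real bookkeeping in objects such as DServer. In particular, returning KErrPermissionDenied for the protected-name case is this sketch's choice. OwnedBy() mirrors the idea behind the client-side SID policy from the opening example: a name alone proves nothing, so the client also checks who registered it.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <set>
#include <string>

// Hypothetical model of the kernel's server-name registry; not the real API.
enum class Capability { ProtServ, ReadUserData };

constexpr int KErrNone = 0;
constexpr int KErrAlreadyExists = -11;
constexpr int KErrPermissionDenied = -46;

struct Process {
    std::uint32_t sid;
    std::set<Capability> caps;
};

class ServerNameRegistry {
public:
    // Models the check made when a server is started under a given name.
    int Register(const std::string& aName, const Process& aOwner) {
        const bool isProtected = !aName.empty() && aName[0] == '!';
        if (isProtected && aOwner.caps.count(Capability::ProtServ) == 0)
            return KErrPermissionDenied;  // protected name space needs ProtServ
        if (iOwners.count(aName) != 0)
            return KErrAlreadyExists;     // the first registrant keeps the name
        iOwners[aName] = aOwner.sid;
        return KErrNone;
    }

    // Models a client's connect-time SID check (cf. _LIT_SECURITY_POLICY_S0).
    bool OwnedBy(const std::string& aName, std::uint32_t aExpectedSid) const {
        auto it = iOwners.find(aName);
        return it != iOwners.end() && it->second == aExpectedSid;
    }

private:
    std::map<std::string, std::uint32_t> iOwners;  // server name -> owner SID
};
```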

A convenient side-effect of this is that all such system servers are going to hold at least one capability – and a system capability at that – meaning that the loader rules prevent such system servers from loading any lesser trusted DLLs (i.e. any DLL lacking the ProtServ capability).

IPC Security Policy Check

This is the most significant new security measure available to servers within the platform security architecture. Servers can specify security policies defining which clients are allowed access to which parts of their IPC interfaces.

As we saw in the opening example, servers can make policy checks against clients at any time that it is appropriate, in order to determine whether a client is authorized to perform a given operation. This check can be against the identity of the client – i.e. against its SID – or, more generally, against the capability set held by the client, or a combination of both. In addition, it is possible for servers to derive their own security framework, built upon these building blocks, should it be necessary.
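A policy that tests a SID, a set of capabilities, or both can be modeled as shown below. This is a hypothetical standard C++ stand-in for TSecurityPolicy, not its real layout. The real class is a compact object built with macros such as _LIT_SECURITY_POLICY_C1 and _LIT_SECURITY_POLICY_S0 (both used earlier in this chapter), and it limits how many capabilities one policy can name.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

enum class Capability { ReadUserData, WriteUserData, NetworkServices };

struct Client {
    std::uint32_t sid;           // the client process's secure ID
    std::set<Capability> caps;   // capabilities the client process holds
};

// Hypothetical model of a security policy: an optional SID plus required caps.
struct SecurityPolicy {
    bool checkSid;
    std::uint32_t sid;
    std::set<Capability> required;

    bool Check(const Client& aClient) const {
        if (checkSid && aClient.sid != sid)
            return false;                       // identity check
        for (Capability c : required)
            if (aClient.caps.count(c) == 0)
                return false;                   // every required capability must be held
        return true;
    }
};
```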

CPolicyServer Framework

As we touched upon in Section 5.1.2, correctly coding, verifying and maintaining a large or complex set of security policy checks within a server is a potentially error-prone activity. For this reason, the user library provides base classes to make life easier for the server writer. This framework, called CPolicyServer, allows the definition of a static policy table based on the opcode number of the function being invoked over IPC. Once mastered, it can reduce the repetition involved in setting up a server’s security policy. This is an important framework, and we will cover it in more detail in Section 5.4.2.
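To give a feel for what a static, opcode-based policy table buys you, here is a simplified model in standard C++. The layout (ascending range starts, one element index per range, and a list of policy elements) follows the general shape of CPolicyServer’s policy table. However, PolicyTable, CheckFunction and the special index values are hypothetical. The real framework uses its own types and names its special cases CPolicyServer::EAlwaysPass, ENotSupported and ECustomCheck. Each opcode falls into exactly one range, so adding a function means editing one table row rather than writing another check by hand.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <vector>

enum class Capability { ReadUserData, WriteUserData };

constexpr int KErrNone = 0;
constexpr int KErrNotSupported = -5;
constexpr int KErrPermissionDenied = -46;

// Special element indices, loosely modeled on CPolicyServer::EAlwaysPass and
// CPolicyServer::ENotSupported (the values here are arbitrary).
constexpr std::uint8_t kAlwaysPass = 0xFF;
constexpr std::uint8_t kNotSupported = 0xFE;

struct PolicyTable {
    std::vector<int> rangeStarts;                // ascending; rangeStarts[0] == 0
    std::vector<std::uint8_t> elementIndex;      // one entry per range
    std::vector<std::set<Capability>> elements;  // capabilities each element demands
};

int CheckFunction(const PolicyTable& aTable, int aFunction,
                  const std::set<Capability>& aCaps) {
    // Find the last range whose start is <= aFunction.
    auto it = std::upper_bound(aTable.rangeStarts.begin(), aTable.rangeStarts.end(), aFunction);
    if (it == aTable.rangeStarts.begin())
        return KErrNotSupported;  // negative opcode: below the first range
    const std::size_t range = static_cast<std::size_t>(it - aTable.rangeStarts.begin()) - 1;
    const std::uint8_t index = aTable.elementIndex[range];
    if (index == kAlwaysPass)
        return KErrNone;
    if (index == kNotSupported)
        return KErrNotSupported;
    for (Capability c : aTable.elements.at(index))
        if (aCaps.count(c) == 0)
            return KErrPermissionDenied;
    return KErrNone;
}
```

In this example table, opcodes 0 and 1 are open to everyone, 2 and 3 need ReadUserData, 4 and 5 need WriteUserData, and anything from 6 upwards is rejected.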

Data Caging

A server has the use of the private data-cage owned by the process it is running in. Once again assuming a one-to-one process–server relationship, this implies one private data-cage per server.

This is one mechanism through which the server can store non-volatile information it needs for its operation. Storing and sharing data is discussed further in Chapter 7.

There are numerous examples of servers making use of their data-caged area for storing private or confidential files. For example, the messaging server holds messages such as emails and text messages within its data-caged area.

Anonymous Objects and Secure Handle Transfer

The EKA2 kernel provides a powerful mechanism through which handles to kernel objects can be securely passed between processes, to allow secure sharing of the underlying resource. For example, a handle to an RMsgQueue may be passed from a producer process to a consumer process, and no other process will be permitted access to the kernel queue object.

There are two primary means for transferring such handles: at process start up in so-called process environment slots, and over the client–server interface.

Only handles to global objects can be transferred between processes using this mechanism. However, traditionally global objects were always accessible to any process, through an open by name operation. For this reason, unnamed (or anonymous) global objects have been introduced. To create an anonymous global object, KNullDesC should be passed as the name parameter in the appropriate Create() method.

Secure Server Design and Implementation

The following are design and implementation best practices, which, if followed, can also be considered as security measures employed by the server.

Constrain Server Responsibilities and Dependencies

A server that performs many different roles is harder to develop securely, and more of a liability should there be a security vulnerability within it. A server that has run-time dependencies on many other parts of the system will be more fragile than one that has a constrained set of dependencies.

Architecturally, it is far simpler to consider and validate the behavior of a server that has clearly identified responsibilities, and constrained and identified dependencies, than one that performs many roles with dependency on many disparate subsystems. The simpler the design and validation of a server the better, as there will be fewer opportunities for introducing errors that could result in security vulnerabilities. Conversely, the more services a given server depends on, the more variables there are, and so the more complex the validation of its behavior will be. And the more security sensitive a server is, the more consideration should be given to this issue.

Parameter Validation

Whenever a client passes data to a server, the server must carefully validate the data before acting upon it – just as it must for any data coming in to the mobile phone, via a file or communication socket, for instance. This does not just apply to parameters supplied by the client application to the client library, but to all parameters received via the IPC message. So, even if a particular parameter is only ever provided by the client library itself in normal operation, it must still be validated in the server in case of an inept or malicious application creating its own messages to send to the server.
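A minimal sketch of this discipline follows, written in standard C++ with hypothetical names (kInfoItems, HandleGetInfo) and stand-in error constants. A well-behaved client library would normally fill in the argument correctly, but the server still range-checks it before using it as an index. It also checks the size of the client's buffer before writing anything.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <string>

constexpr int KErrNone = 0;
constexpr int KErrArgument = -6;
constexpr int KErrOverflow = -9;

// Hypothetical server-side table indexed by a value the client library supplies.
const std::array<const char*, 3> kInfoItems = {{"name", "email", "phone"}};

// Validate every field of the request before using it, even though a
// well-behaved client library would never send out-of-range values.
int HandleGetInfo(int aInfoRequired, int aClientMaxLength, std::string& aOut) {
    if (aInfoRequired < 0 || aInfoRequired >= static_cast<int>(kInfoItems.size()))
        return KErrArgument;  // reject instead of indexing out of bounds
    const std::string value = kInfoItems[aInfoRequired];
    if (aClientMaxLength < 0 || static_cast<std::size_t>(aClientMaxLength) < value.size())
        return KErrOverflow;  // the client's buffer is too small for the result
    aOut = value;
    return KErrNone;
}
```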

Particular problems are seen with asynchronous methods, as there is increased potential for the client data to go out of scope (for example, if a function with locally-scoped data terminates) between it being referenced in the message and the message being processed by the server. This is true even if the server runs at a higher priority than the client, as it may be blocked on some other operation at the moment the client thread issues the request. If a careless client releases and reuses the parameter’s memory space before the server has processed the request, then it’s probable that the server will receive an error in its attempt to read, write, or validate the data. A multi-threaded client, or one using shared memory, may exhibit similar bugs on synchronous calls too: one thread may modify the parameter’s memory contents or allocation state while the requesting thread is blocked waiting for the server.

To combat this, the server must expect to handle errors arising during client memory access, i.e. from the Read(), Write() and GetDesLength() members of RMessagePtr2; wherever possible, use the leaving overloads. The new RMessagePtr2::GetDesLengthL() leaving overloads deserve particular mention. They allow the server to safely discover the length of a client buffer, and let the standard exception framework take on the burden of handling a descriptor error, so that the server does not have to remember to check manually for negative error results, as it must with the older non-leaving version.
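The difference between the two styles can be modeled as follows. In this standard C++ sketch, a thrown Leave object stands in for a Symbian leave. ClientArgs, GetDesLength and GetDesLengthL are hypothetical models of the RMessagePtr2 members, not their real implementations. Using KErrBadDescriptor for an invalid argument is likewise an assumption of the sketch.

```cpp
#include <cassert>
#include <vector>

constexpr int KErrNone = 0;
constexpr int KErrBadDescriptor = -38;

// Thrown to model a Symbian leave carrying an error code.
struct Leave { int code; };

// Hypothetical model of the client's argument slots as seen by the server:
// a negative entry means the slot no longer refers to a valid descriptor.
struct ClientArgs {
    std::vector<int> desLengths;
};

// Non-leaving style: returns the length, or a negative error code the caller must check.
int GetDesLength(const ClientArgs& aArgs, int aIndex) {
    if (aIndex < 0 || aIndex >= static_cast<int>(aArgs.desLengths.size()))
        return KErrBadDescriptor;
    const int len = aArgs.desLengths[aIndex];
    return len < 0 ? KErrBadDescriptor : len;
}

// Leaving style: an error becomes a leave, so callers cannot forget to check it.
int GetDesLengthL(const ClientArgs& aArgs, int aIndex) {
    const int len = GetDesLength(aArgs, aIndex);
    if (len < 0)
        throw Leave{len};
    return len;
}
```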

Also, a server should never accept or use a pointer received over IPC. We have already noted that you must never call code via a pointer received in such a way, but security problems can also result from data that is pointed to being changed in unexpected ways or at unexpected times. In particular watch out for global or shared chunks – using them in a useful yet secure fashion is tricky enough not to be worth the effort except in the most bandwidth-critical applications.

Robust Error-handling Framework

Continuing from the previous point, the server-side error-handling framework should not be overlooked, as it is a very significant part of the security design of the server interface. The server error framework is built upon the standard CActive implementation of the leave/trap Symbian OS primitives. Unlike the IPC v1 CServer class, both CServer2 and CPolicyServer provide an implementation of the CActive::RunError() interface, and, if relevant, they pass the trapped error onto the specific CSession2 instance that was handling execution at the point the leave was encountered, providing it with the message that was being processed at that time via the CSession2::ServiceError() method.

This means that concrete implementations of CSession2 are able and encouraged to make maximum use of the leave framework. If required, the concrete session class can override ServiceError() with a custom implementation, although the default implementation, which simply completes the message with the leave code that was thrown, will suffice in many instances.

This means that if, during the initial (synchronous) processing of a client request, any error occurs – for example, low memory or disk resources, an invalid parameter in a deeply nested structure provided by the client, or a security policy failure – the same error handling framework will tidy up any partially allocated resources and complete the request, signaling the error condition back to the client.
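The shape of this framework can be modeled in standard C++, with a thrown Leave object standing in for a leave and a std::unique_ptr standing in for an item on the cleanup stack. Session, Dispatch, ExampleSession and Message are hypothetical names that only loosely follow CSession2 and the CServer2 RunError() path described above.

```cpp
#include <cassert>
#include <memory>

constexpr int KErrNone = 0;
constexpr int KErrNoMemory = -4;
constexpr int KErrPermissionDenied = -46;

// Thrown to model a Symbian leave carrying an error code.
struct Leave { int code; };

// Hypothetical stand-in for a client message awaiting completion.
struct Message {
    int function = 0;
    bool completed = false;
    int completionCode = 0;
    void Complete(int aCode) { completed = true; completionCode = aCode; }
};

// Loosely modeled on CSession2: ServiceL() may leave, and the default
// ServiceError() completes the message with the leave code.
class Session {
public:
    virtual ~Session() = default;
    virtual void ServiceL(Message& aMessage) = 0;
    virtual void ServiceError(Message& aMessage, int aError) { aMessage.Complete(aError); }
};

// Loosely modeled on the framework's RunError() path: trap the leave and
// hand it, with the message being processed, to the session.
void Dispatch(Session& aSession, Message& aMessage) {
    try {
        aSession.ServiceL(aMessage);
    } catch (const Leave& aLeave) {
        aSession.ServiceError(aMessage, aLeave.code);
    }
}

class ExampleSession : public Session {
public:
    void ServiceL(Message& aMessage) override {
        // Stands in for a cleanup stack item: released automatically on a leave.
        std::unique_ptr<int[]> scratch(new int[64]);
        if (aMessage.function == 1)
            throw Leave{KErrPermissionDenied};  // e.g. a failed policy check
        if (aMessage.function == 2)
            throw Leave{KErrNoMemory};          // e.g. a failed allocation
        aMessage.Complete(KErrNone);
    }
};
```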

Robust API Design

Getting the API design right can greatly ease the design and implementation of the security policy for that API. Here are a few points to consider:

- Functions with a specific purpose are easier to provide a policy for than multipurpose, generic or ambiguous methods, where the context must be taken into consideration in order to decide on the appropriate policy. For example, contrast RDisk::Format() with RDisk::PerformAdministrativeOperation(TOperation) or RDisk::Extension(TExtId).

- Having a specific purpose also helps the user of the API to create secure code, as it is clearer what the consequences of calling the method might be.

- The primary outcome of a security policy failure in an API is an error code result, for example, KErrPermissionDenied. More generally, the error modes of every API deserve careful thought at design time.

- Error codes should be returned to the client application at every point where an error can legitimately arise, but not in a place where the client would not be able to handle the error (such as when canceling or closing down). Generally the error should be indicated at the point at which failure has become inevitable, but no sooner.

Server Name

We saw how a client can use a security policy to ensure that it connects only to the intended server. However, it is also useful for the server to choose a sensible name in the first place. Longer names reduce the chance of a clash and, as you can see in the opening example of this chapter, using the unique part of a DNS name that you own can improve matters further.

It is also worth pointing out that the server name is quite distinct from the process name. Both are stored by the kernel: the server name in a DServer object, the process name in a DProcess object. As already mentioned, one process can have many servers running in it. By default the process name is the name of the EXE that was used to launch it; however, a process can rename itself to any name it wishes, as long as the new name is unique at that time. The only real value of a process name is for debugging and diagnostics. Specifically, no security check should be made based on a process’s name (use the SID instead), and it should not be displayed to the user, as it is meaningless at best and downright misleading at worst.

Determining the Security Policy

We now turn to one of the most common questions in the design of secured APIs: what capability should I use to protect access to my sensitive resources?

Here are the questions you should ask yourself in order to determine the answer:

Does it need protection at all?

Use threat analysis to drive this.

Is this the right point to make a policy check?

The aim is to make the security policy test at the point where the client has requested a security sensitive operation, and repeat the check on every such operation. Making a policy check early, and caching the results for future use, can lead to so-called ‘TOCTOU’ (time of check; time of use) errors in the code. Do not rely on the client following a preferred order of function calls to the server to implement the correct policy – every security-sensitive action should consider its own policy check requirements. The one exception to this rule is where every operation on a server has the same security policy requirements. In this case, the policy check can be made once at session establishment, and need not be repeated on each subsequent operation in that session.

Another point to remember is that security policy checks against a client must only be made within the context of the server, and not within the client-side code. You cannot trust the client not to skip over or corrupt such a client-side check – this is why we refer to the process boundary as the trust boundary.
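The rule of checking every sensitive operation on its own, on the server side, can be sketched as a portable C++ model. The capability bits, opcodes, and function names below are hypothetical; the contrast is with a session that checks once in "Open", sets a flag, and trusts that flag in "Write", which a client defeats simply by sending "Write" first.

```cpp
#include <cstdint>

// Hypothetical capability bits, for illustration only.
enum : uint32_t {
    kReadUserData = 1u << 0,
    kWriteUserData = 1u << 1,
};

// Hypothetical opcodes of a session-based server.
enum Opcode { kOpen, kRead, kWrite };

constexpr int KErrNone = 0;
constexpr int KErrPermissionDenied = -46;  // value as in e32err.h

// Each opcode states its own requirement; nothing is inferred from which
// opcodes the client happened to send earlier.
uint32_t RequiredCaps(Opcode aOp) {
    switch (aOp) {
        case kOpen:  return 0;  // nothing sensitive happens here
        case kRead:  return kReadUserData;
        case kWrite: return kWriteUserData;
    }
    return ~uint32_t{0};  // unknown opcode: require everything (fail safe)
}

// Runs in the server for every request, using the capabilities of the
// client that sent this particular message (in Symbian, via RMessage2).
int CheckRequest(Opcode aOp, uint32_t aClientCaps) {
    const uint32_t required = RequiredCaps(aOp);
    return (aClientCaps & required) == required ? KErrNone : KErrPermissionDenied;
}
```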

Can the API be made secure without restricting it with a capability or caller identity?

Careful API design can often reduce the threat presented by an API. For example, by designing fair brokering of access to a shared resource such as the display or speaker, a server might let all clients have some degree of access regardless of capability. When detection and response are possible, this may be preferable to prevention: for example, if the user can detect undesired sound being output, and can respond by muting the device, sound output can be left unrestricted.

What aspects of the API need restricting?

Arrange the API so that sensitive operations are separated from non-sensitive ones – for example, under different methods and server opcodes – where possible.

Do you know the identity of a single process that is, architecturally, the only client of this method?

If so, its SID may be checked instead of a capability.

Is it acceptable to use a list of known clients as the policy?

Generally this is undesirable – the capability model was created specifically to avoid the need to manage large access control lists – but in some application domains it may be acceptable.

What is the asset and what is the threat to it, which you are protecting against?

Check if there is an existing system API that is sufficiently similar to have set a precedent for how this asset is to be protected.

Is there an external or industry policy or requirement about how this asset must be protected?

There may be some regulatory or commercial circumstances that require separate cryptographic or other mechanisms to be employed to verify the authenticity or permissibility of the requester or the request.

Does this method layer over some other API?

Consider whether the new API fully exposes the lower-level API (and if so, why this duplication?) or if it does so in a limited form. Consider also whether the policy on the lower-level API is appropriate for this higher level method, and whether the threat is reduced by using this method. For example, the Symbian OS Bluetooth stack enforces LocalServices at its client API, even though it is revealing functionality that is implemented over APIs protected with the CommDD capability. On the other hand, a CSY offering direct serial port access to the Bluetooth hardware would duplicate the device driver policy of requiring CommDD. One particular aspect to consider here is whether your server might be ‘leaking’ access to the underlying sensitive API. All processes holding capabilities have a duty to mediate their use of them on behalf of other processes, and not leak access in this way.

What would be the impact of not protecting this method?

Possible consequences could be loss of users’ confidential data, unauthorized access to the network, unauthorized phone reconfiguration, and so on. This can give an initial pointer as to which capability should apply.

Will the client application be able to get the necessary capabilities?

Once you have identified a proposed capability (or set of capabilities) under which the API could be protected, you should carefully consider whether this capability is realistically going to be available to the target audience of the API. As a rough indicator, the division between user and system capabilities can give some idea; however, more detailed information can be sought from the signing authority responsible for allocating capabilities to applications on the mobile phone (for Symbian Signed, the common criteria are covered in Chapter 9). If the capability is not available, then the API may need to be rethought, or you may have hit upon an intractable incompatibility between the desired access to a resource and the risk in providing that access.

What to Do on Security Policy Failures

When you reject a client request, it is generally recommended that you do so by completing the relevant message with an appropriate error code. KErrPermissionDenied should only be used in the case where a policy check has failed, as it gives a clear indication to the application developer or user that it is a security policy failure, rather than simply an invalid argument or resource error.

Another option is to modify the behavior of the method in a minor way, but let the request proceed. This might be useful for maintaining compatibility, but it can cause problems for the application developer in the future. If, at some later point, circumstances change and the request now passes that policy check (for example, because the client acquires an additional capability for an unrelated reason), then a debugged and working client will suddenly experience the alternative behavior, with unintended and potentially insecure results.
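The recommended approach of rejecting with a distinct error code can be sketched in portable C++. The request type and function below are hypothetical; the error values mirror Symbian's e32err.h, and the point is only that KErrPermissionDenied is reserved for genuine policy failures.

```cpp
constexpr int KErrNone = 0;
constexpr int KErrArgument = -6;           // values as in e32err.h
constexpr int KErrPermissionDenied = -46;

// Hypothetical request: delete the entry at index.
struct DeleteRequest {
    int index;
    bool clientPassesPolicy;  // outcome of the server-side policy check
};

// Returns the code used to complete the message. A policy failure and a bad
// argument are reported differently, so the developer can tell them apart.
int CompletionCode(const DeleteRequest& aReq, int aEntryCount) {
    if (!aReq.clientPassesPolicy) return KErrPermissionDenied;
    if (aReq.index < 0 || aReq.index >= aEntryCount) return KErrArgument;
    return KErrNone;  // ...perform the deletion...
}
```

Checking the policy before validating the arguments is a design choice in this sketch: it avoids revealing anything (such as the number of entries) to a client that is not entitled to the operation at all.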

Server Implementation Considerations

Client-side Considerations

Many servers provide a client-side library to encapsulate the client–server protocol and expose the API as a set of exported methods. There are two significant things to bear in mind if you use this architecture:

- As this code is running within the client process, it is futile to perform any security checks as they can easily be defeated.

- As this code is running within the client process, it must be sufficiently trusted to be loaded by that process.

The first statement reiterates what we’ve seen in the previous sections: security policies should only be checked at the point where a process boundary is crossed. Within the client library no boundary has been crossed, so a security check is both unnecessary and ineffective.

The second statement needs a little more consideration. It asserts that the client needs to trust your code in order to use it. This is to avoid the client process being tricked into doing something unintentional through the use of untrusted code.

If the client library is distributed as a binary DLL, as most are, then the loader’s capability rules, as described in Chapter 2, will enforce this trust dependency: the client library must have at least the capability set of each client trying to load it in order to be loaded by that client. For a general-purpose server, intended for use by a wide range of clients, this means that the client library must have a wide set of capabilities. For this reason, the client libraries of most Symbian-provided servers are assigned all capabilities except Tcb. This is accepted as a trade-off between maximizing the utility of the server and constraining the code base trusted to run within the most sensitive part of the system. It is envisaged that any general-purpose third-party server would follow a similar approach, choosing a capability set for the client library that matches the capability sets of the applications it targets.
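The loader rule amounts to a subset test on capability sets. A portable C++ sketch, assuming capability sets are modelled as bitmasks with a hypothetical bit assignment (Tcb as bit 0) and the 20 capabilities of Symbian OS v9:

```cpp
#include <cstdint>

// Capability sets modelled as bitmasks; the bit assignment is hypothetical.
using CapSet = uint64_t;

constexpr CapSet kTcb = CapSet{1} << 0;                 // hypothetical Tcb bit
constexpr CapSet kAllCaps = (CapSet{1} << 20) - 1;      // assumes 20 capabilities
constexpr CapSet kClientLibCaps = kAllCaps & ~kTcb;     // "all except Tcb"

// Loader rule: a DLL may be loaded into a process only if the DLL holds
// every capability the process holds (process caps are a subset of DLL caps).
bool CanLoad(CapSet aProcessCaps, CapSet aDllCaps) {
    return (aProcessCaps & ~aDllCaps) == 0;
}
```

With this model, a client library built with "all except Tcb" can be loaded by every process except those holding Tcb, which is exactly the trade-off described above.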

What if the client library cannot be provided with the full set of capabilities that clients might possess? This is, in effect, an architectural ‘early warning’ indicator for the developer of the application wishing to use the less-trusted library. It means that the application is dependent on a service that is less trusted, and perhaps lower quality, than itself.

However, in some circumstances it may still be necessary to keep to this architecture. In this case, the server’s client library could be supplied in either source or static library (LIB) format to the application developer, to be included directly within their own binary. This moves the burden of responsibility for the code to the application developers, who must satisfy themselves that they trust the source and intent of the code at application build time (as opposed to security being enforced at run-time), just as they must be responsible for all instructions in their compiled binary.

Remember that if the client code is distributed in this way, then the IPC protocol is not encapsulated within a binary interface but instead becomes a public interface. This has consequences for compatibility: for example, any changes to the IPC function numbers or server name might break compatibility with existing clients.

The other important considerations for the client library were illustrated in the opening example. To recap:

- Calls to the RSessionBase::SendReceive() methods should be recoded in IPC v2 format, that is, using TIpcArgs in place of TAny* parameters.

- When creating a session to the server, it is wise to add a policy check that ensures that the server is running with the expected SID.

- For servers that operate in the ProtServ domain, the name will need to be changed to start with ‘!’. Only servers which are system critical – without which the system cannot operate – need to implement this, which is, by definition, rare for an after-market application.

A final point to consider is the documentation for the client interface; this is most important where the client interface is to be shared with others. As a rule, security objectives are met when observed behavior matches expected behavior. Clear client interface documentation is an important means of establishing those expectations.

Server Considerations

The recommended way of adding security policy checks into a server is to use the CPolicyServer framework. This involves deriving the server’s main class from the CPolicyServer base class, instead of CServer or CServer2.

If you are migrating a server to this framework, you will see that the following changes need to be made:

- On construction the CPolicyServer requires a parameter of type CPolicyServer::TPolicy to be supplied to it.

- Two virtual methods, CPolicyServer::CustomSecurityCheckL() and CPolicyServer::CustomFailureActionL(), may need to be overridden if they are referenced by the TPolicy table provided in the constructor.

Policy Tables

The policy table is designed to allow a very compact representation of large or complex server policies, and to allow fast lookups on that data. In addition, it is designed to allow the table to reside in constant static data, which not only saves on construction time, but also makes it far less likely to be tampered with, as the memory page holding it will usually be marked read-only.

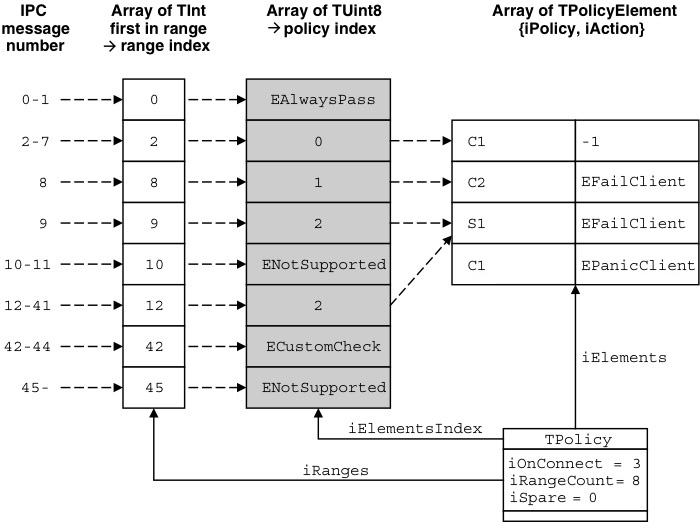

Alas, all these good features come with a slight penalty of legibility. A diagram (see Figure 5.1) is useful for understanding how the TPolicy table works.

Figure 5.1 TPolicy Structure

Here we see an example TPolicy instance, with the three array members – iRanges, iElementsIndex, and iElements – expanded.

The elements of the first array, iRanges, correspond to IPC function numbers. When a message is received in a session, the value of RMessage2::Function() is searched for in this array to determine what action the policy server framework will apply to the message; the index at which it is found is called the range index. For example, an IPC message with function value 8 causes the value 8 to be searched for in this first array. The value 8 is the third entry, and since indexes are counted from 0, this yields a range index of 2. If the exact value is not in the array, then the closest value below it is taken. So if IPC function 15 arrives at this table, a range index of 5 is chosen, as this is the index at which the nearest lower value to 15 (that is, 12) is held. To support this, the array must be held in ascending numerical order, which also conveniently enables a fast search to be performed. Note also that the table must start with a 0 element: negative entries are not allowed (negative IPC functions are reserved for use by the server framework itself).
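The search described above is a greatest-lower-bound lookup. As a portable C++ sketch (a model of the described behavior, not the framework's own source), with the example iRanges values hard-coded into a hypothetical `kRanges` array:

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>

// The iRanges values from this chapter's example table.
const int kRanges[] = {0, 2, 8, 9, 10, 12, 42, 45};

// Returns the range index for an IPC function number: the index of the
// largest entry <= aFunction. Requires kRanges sorted and starting at 0.
int RangeIndex(int aFunction) {
    assert(aFunction >= 0 && kRanges[0] == 0);  // negative functions are reserved
    const int* first = std::begin(kRanges);
    // upper_bound finds the first entry strictly greater than aFunction;
    // the entry just before it is the nearest value at or below aFunction.
    const int* it = std::upper_bound(first, std::end(kRanges), aFunction);
    return static_cast<int>(it - first) - 1;
}
```

For example, `RangeIndex(8)` is 2 and `RangeIndex(15)` is 5, matching the walkthrough; any function number from 45 upwards falls in the last range.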

The range index is used as an index into the second array, iElementsIndex, to determine the policy index. So IPC function 9 corresponds to range index 3, which corresponds to a policy index of 2.

Following the same pattern, the policy index is then used as the index into the third and final array, iElements. This yields the TPolicyElement that should be applied to this particular IPC function. Each object of this type contains both the security policy that should be applied (encoded in the iPolicy member as a TSecurityPolicy, seen in our opening example) and the failure action to take in the case where the policy check fails (encoded in the iAction member).

Using the example of IPC function 8, with policy index of 1, we see the policy that will be applied is labeled C2 – this represents an instance of a security policy demanding that the client hold two specific capabilities. In our example code this is initialized with the _INIT_SECURITY_POLICY_C2 macro. If a client invoking this IPC function does not hold both specified capabilities, then the failure action EFailClient (from the CPolicyServer::TFailureAction enumeration) indicates that the message must be completed with the KErrPermissionDenied error code.

This policy table is described in code as follows:

```cpp
const TUint myRangeCount = 8;

const TInt myRanges[myRangeCount] =
    {
    0,  //range is 0-1 inclusive
    2,  //range is 2-7 inclusive
    8,  //range is 8 only
    9,  //range is 9 only
    10, //range is 10-11 inclusive
    12, //range is 12-41 inclusive
    42, //range is 42-44 inclusive
    45, //range is 45-KMaxTInt inclusive
    };

const TUint8 myElementsIndex[myRangeCount] =
    {
    CPolicyServer::EAlwaysPass,   //IPC 0 - 1
    0,                            //IPC 2 - 7
    1,                            //IPC 8
    2,                            //IPC 9
    CPolicyServer::ENotSupported, //IPC 10 - 11
    2,                            //IPC 12 - 41
    CPolicyServer::ECustomCheck,  //IPC 42 - 44
    CPolicyServer::ENotSupported, //IPC 45 - KMaxTInt
    };

const CPolicyServer::TPolicyElement myElements[] =
    {
    // Negative failure action: CustomFailureActionL() is called on failure
    {_INIT_SECURITY_POLICY_C1(KMyCap1), -1},      //IPC 2 - 7
    {_INIT_SECURITY_POLICY_C2(KMyCap2A, KMyCap2B),
        CPolicyServer::EFailClient},              //IPC 8
    {_INIT_SECURITY_POLICY_S1(KMySID, KMyCap3),
        CPolicyServer::EFailClient},              //IPC 9, 12 - 41
    {_INIT_SECURITY_POLICY_C1(KMyConnectCap),
        CPolicyServer::EPanicClient},             //Connect
    };

const CPolicyServer::TPolicy myPolicy =
    {
    3, // Connect messages use policy index 3
    myRangeCount,
    myRanges,
    myElementsIndex,
    myElements,
    };
```

If you get confused following these steps, it is useful to remember that the IPC number goes through a reverse lookup in iRanges, the result of which (the range index) goes through a forward lookup in iElementsIndex, and the result of that (the policy index) goes through a forward lookup in iElements.
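The three lookups can be traced end to end with a portable C++ model of the example table. This is a sketch only: the policies are reduced to text labels, and the special-case values in `SpecialCase` are illustrative stand-ins for CPolicyServer::TSpecialCase rather than the values in the Symbian headers.

```cpp
#include <algorithm>
#include <cstdint>
#include <iterator>

// Illustrative stand-ins for CPolicyServer::TSpecialCase values.
enum SpecialCase : uint8_t {
    kAlwaysPass = 253,
    kNotSupported = 254,
    kCustomCheck = 255,
};

// The example table from this chapter, with policies reduced to labels.
const int kRanges[] = {0, 2, 8, 9, 10, 12, 42, 45};
const uint8_t kElementsIndex[] = {kAlwaysPass, 0, 1, 2, kNotSupported, 2, kCustomCheck, kNotSupported};
const char* const kElements[] = {"C1(KMyCap1)", "C2(KMyCap2A, KMyCap2B)",
                                 "S1(KMySID, KMyCap3)", "C1(KMyConnectCap)"};
const uint8_t kOnConnect = 3;  // models TPolicy::iOnConnect

static_assert(sizeof(kRanges) / sizeof(kRanges[0]) == sizeof(kElementsIndex),
              "one policy index per range");

// Reverse lookup in kRanges gives the range index; forward lookup in
// kElementsIndex gives the policy index (or a special case).
uint8_t PolicyIndexFor(int aFunction) {
    const int* first = std::begin(kRanges);
    const int* it = std::upper_bound(first, std::end(kRanges), aFunction);
    return kElementsIndex[(it - first) - 1];
}

// Forward lookup in kElements, unless the index is a special case, in which
// case there is no element to look up.
const char* PolicyFor(uint8_t aPolicyIndex) {
    switch (aPolicyIndex) {
        case kAlwaysPass:   return "always pass";
        case kNotSupported: return "not supported";
        case kCustomCheck:  return "custom check";
        default:            return kElements[aPolicyIndex];
    }
}
```

Connect messages bypass the first two steps in the same way as the real framework's iOnConnect: `PolicyFor(kOnConnect)` goes straight to the element lookup.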

When a policy check fails, i.e. whenever the CPolicyServer::EFailClient result is encountered, diagnostics can be generated in the emulator to aid debugging, as already mentioned in Chapter 3. Here’s an example:

*PlatSec* ERROR - Capability check failed - A Message (function number=0x000000cf) from Thread helloworld[10008ace]0001::HelloWorld, sent to Server !CntLockServer, was checked by Thread CNTSRV.EXE[10003a73]0001::!CntLockServer and was found to be missing the capabilities: WriteUserData . Additional diagnostic message: Checked by CPolicyServer::RunL

In this example diagnostic you can see:

- the message function number: 0x000000cf

- the client process and thread that sent the request: helloworld[10008ace]0001::HelloWorld

- the name of the server that received the request: !CntLockServer

- the name of the process and thread in which the server is hosted: CNTSRV.EXE[10003a73]0001::!CntLockServer (note the exclamation mark on the thread name here is coincidental, and not enforced by the ProtServ capability)

- the reason that the TSecurityPolicy check failed: a lack of WriteUserData

- the additional diagnostic information, in this case indicating that the security policy check was made from within the CPolicyServer::RunL framework function.
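The "missing the capabilities" part of such a diagnostic comes from comparing what the policy requires with what the client holds. A cut-down portable C++ model of that comparison, covering the capability-only (C1, C2) and SID-plus-capability (S1) forms; the capability bits are hypothetical, and using a SID of 0 to mean "no SID requirement" is an assumption of this model, not of TSecurityPolicy.

```cpp
#include <cstdint>

// Hypothetical capability bits (the real TCapability values differ).
enum : uint32_t {
    kReadUserData = 1u << 0,
    kWriteUserData = 1u << 1,
    kLocalServices = 1u << 2,
};

// Model of a policy: required capabilities plus an optional required SID
// (0 = no SID requirement, an assumption of this model).
struct Policy {
    uint32_t requiredCaps;
    uint32_t requiredSid;
};

struct Client {
    uint32_t caps;
    uint32_t sid;
};

// Returns true if the client satisfies the policy; always reports the
// missing capabilities, as listed in the diagnostic above.
bool CheckPolicy(const Policy& aPolicy, const Client& aClient, uint32_t& aMissingCaps) {
    aMissingCaps = aPolicy.requiredCaps & ~aClient.caps;
    const bool sidOk = aPolicy.requiredSid == 0 || aPolicy.requiredSid == aClient.sid;
    return sidOk && aMissingCaps == 0;
}
```

For instance, a client holding only ReadUserData that calls an operation requiring WriteUserData fails, with WriteUserData reported as missing.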

Special Cases

There are some special cases to consider in the framework. Firstly, connect messages do not go through the first two array lookups, as connect messages do not have normal IPC function numbers. Instead, the policy index for a connect request is taken directly from the iOnConnect member of TPolicy itself. This is then looked up in the third array as normal.

Secondly, if any policy index found by lookup in the iElementsIndex array or from iOnConnect is a value from the CPolicyServer::TSpecialCase enumeration, then no policy element lookup occurs, but instead the policy is inferred as follows:

- CPolicyServer::EAlwaysPass – the IPC function is allowed to go ahead with no specific policy check against the client; that is, any client that can establish a session may call this method

- CPolicyServer::ENotSupported – the IPC message processing is completed immediately with KErrNotSupported. This should be used as the final element in iElementsIndex, and also to fill any other ‘holes’ in the IPC function space – for example, where deprecated functions have been removed, or gaps left for compatibility reasons. If any of these opcodes are used by new API methods in the future, the policy table must be considered and updated to allow the policy check to pass – for this reason having a default position of CPolicyServer::ENotSupported is much safer than CPolicyServer::EAlwaysPass. Note there is no CPolicyServer::EAlwaysFail enumeration – you should instead use CPolicyServer::ENotSupported for any opcode that must always fail.

- CPolicyServer::ECustomCheck – the security policy is not based on IPC function alone; run-time consideration is required to determine the policy to apply. A call to CPolicyServer::CustomSecurityCheckL(), discussed below, will be made in response to this.

Finally, if the failure action – identified by the iAction member of CPolicyServer::TPolicyElement – is negative then it means special failure processing should be performed instead of just returning a simple error code to the client (the recommended approach) or a client panic. A call to CPolicyServer::CustomFailureActionL() will be made to allow this to occur.
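Putting these special cases together, the framework's decision for a single message can be modelled as below. This portable C++ sketch only classifies what happens next; the enum values, including the failure-action numbers, are illustrative stand-ins for CPolicyServer's own enumerations.

```cpp
#include <cstdint>

// Illustrative stand-ins for CPolicyServer::TSpecialCase and TFailureAction.
enum SpecialCase : uint8_t { kAlwaysPass = 253, kNotSupported = 254, kCustomCheck = 255 };
enum FailureAction : int { kFailClient = 0, kPanicClient = 1 };

// What the framework does next with the message.
enum class Outcome {
    kService,                   // pass to ServiceL() (or create the session)
    kCompleteNotSupported,      // complete with KErrNotSupported
    kCallCustomCheck,           // call CustomSecurityCheckL()
    kCompletePermissionDenied,  // EFailClient
    kPanicClient,               // EPanicClient
    kCallCustomFailureAction,   // negative action: CustomFailureActionL()
};

// aPolicyPassed is only consulted for an ordinary (non-special) policy index.
Outcome Dispatch(uint8_t aPolicyIndex, bool aPolicyPassed, int aFailureAction) {
    switch (aPolicyIndex) {
        case kAlwaysPass:   return Outcome::kService;
        case kNotSupported: return Outcome::kCompleteNotSupported;
        case kCustomCheck:  return Outcome::kCallCustomCheck;
        default:            break;
    }
    if (aPolicyPassed) return Outcome::kService;
    if (aFailureAction < 0) return Outcome::kCallCustomFailureAction;
    return aFailureAction == kPanicClient ? Outcome::kPanicClient
                                          : Outcome::kCompletePermissionDenied;
}
```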

Custom Checks and Failure Actions

If the policy index equals CPolicyServer::ECustomCheck, then CustomSecurityCheckL() is called. If a failure action is negative, CustomFailureActionL() is called. Potentially, one IPC message could result in calls to both of these methods.

```cpp
TCustomResult CustomSecurityCheckL(const RMessage2 &aMsg,
    TInt &aFailureAction, TSecurityInfo &aMissing);
```

You must override this method if ECustomCheck appears in your policy table; otherwise the base class method will be called, which results in a server panic.

In this method, you can inspect the contents of the RMessage2 received – passed as the first parameter – in order to determine the correct policy to apply. Use this whenever the policy is based on something other than the IPC function number alone, such as the parameters being passed into the method or the current state of the session or server objects. While you are free to form this code as you wish, we recommend you structure this as two distinct stages:

- Determine the TSecurityPolicy object to apply to this request, based on the state of the server, session, or subsession, and the parameters passed in RMessage2, and

- Test the message against the policy so determined.

A generalized implementation might look something like this:

```cpp
CPolicyServer::TCustomResult
CMyPolicyServer::CustomSecurityCheckL(const RMessage2& aMsg,
    TInt& aFailureAction, TSecurityInfo& aMissing)
    {
    // Stage 1: determine the policy that applies to this request
    TSecurityPolicy policy;
    DeterminePolicyL(aMsg, policy);

    // Stage 2: test the message against that policy
    if (policy.CheckPolicy(aMsg, aMissing,
            __PLATSEC_DIAGNOSTIC("example custom check")))
        return EPass;
    else
        return EFail;
    }
```

DeterminePolicyL() can be as simple or complex as required.

Using this structure encourages a more rigorous approach to determining the policy, rather than ad hoc layers of logic and counter-logic being tested against the message. It also aids debugging, as there is a single place to inspect the policy being applied when working out why it is failing, or passing when it shouldn’t. Note that applying the TSecurityPolicy class to the received RMessage2 object, as shown here, is strongly recommended, as it maximizes the amount of diagnostics automatically generated by the server framework.
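The two-stage structure can be illustrated without the Symbian headers. In this portable C++ model, the hypothetical `Message` carries an access mode in its second argument (0 for read, 1 for write), and a C++ exception stands in for a leave from DeterminePolicyL(); the names mirror the chapter's but none of this is the real API.

```cpp
#include <cstdint>
#include <stdexcept>

enum : uint32_t { kReadUserData = 1u << 0, kWriteUserData = 1u << 1 };  // hypothetical bits

// Hypothetical message: args[1] selects read (0) or write (1) access.
struct Message {
    int args[4];
    uint32_t clientCaps;
};

struct Policy {
    uint32_t requiredCaps;
};

enum class CustomResult { kPass, kFail };

// Stage 1: decide which policy applies, from the message arguments (a real
// server might also consult session or server state). Bad input "leaves".
Policy DeterminePolicyL(const Message& aMsg) {
    switch (aMsg.args[1]) {
        case 0:  return Policy{kReadUserData};
        case 1:  return Policy{kWriteUserData};
        default: throw std::invalid_argument("unknown access mode");
    }
}

// Stage 2: test the message against that single policy, in one place.
CustomResult CustomSecurityCheckL(const Message& aMsg) {
    const Policy policy = DeterminePolicyL(aMsg);
    return (aMsg.clientCaps & policy.requiredCaps) == policy.requiredCaps
               ? CustomResult::kPass
               : CustomResult::kFail;
}
```

In the real framework a leave from the custom check is propagated back to the client as an error completion code, which the exception here only approximates.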

The aFailureAction and aMissing parameters passed into this method are primarily of use if you are implementing custom failure actions:

```cpp
TCustomResult CustomFailureActionL(const RMessage2 &aMsg,
    TInt aFailureAction, const TSecurityInfo &aMissing);
```

The CustomFailureActionL method is called by the framework whenever a negative failure action is encountered in processing a security check – either in the iAction of the resolved TPolicyElement for the message, or returned in the second parameter to CustomSecurityCheckL().

It is primarily intended to allow servers to handle security policy failures in a central place. This handling may be for debug logging or tracing, or security audit purposes, or to aid step-through debugging. It may also be used to apply a final override to the outcome of the security check. Such an override is primarily useful for debugging purposes – but do ensure that any such debug-disable is not present in release builds! Also in some limited circumstances this method might be useful for offering the user an opportunity to override the policy failure in a controlled fashion, at run time.

The actual implementation of CustomFailureActionL() follows much the same pattern as CustomSecurityCheckL() above, so much of the advice expressed there holds here too.
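A central failure handler of the kind described above might, as a portable C++ sketch, record every failure for auditing and then uphold it. The audit record, class name, and `MY_DEBUG_POLICY_OVERRIDE` macro are all hypothetical; the override branch exists only to show how a debug-only bypass can be kept out of release builds at compile time.

```cpp
#include <cstdint>
#include <vector>

enum class CustomResult { kPass, kFail };

// Hypothetical audit record for a failed check.
struct AuditEntry {
    int function;
    uint32_t missingCaps;
};

// Central place where every negative-action failure ends up.
class FailureHandler {
public:
    CustomResult CustomFailureActionL(int aFunction, int aFailureAction, uint32_t aMissingCaps) {
        (void)aFailureAction;  // could select among several audit behaviors
        iAudit.push_back(AuditEntry{aFunction, aMissingCaps});
#ifdef MY_DEBUG_POLICY_OVERRIDE  // hypothetical macro: never define in release builds
        return CustomResult::kPass;
#else
        return CustomResult::kFail;  // normally the failure is upheld
#endif
    }
    const std::vector<AuditEntry>& Audit() const { return iAudit; }

private:
    std::vector<AuditEntry> iAudit;
};
```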

The result of both of these custom methods is indicated in the same way: they should either return one of the three values of CPolicyServer::TCustomResult, or leave with an appropriate error code. A leave will be propagated back to the client in the form of a standard error completion code. The custom result codes are used as follows:

- CPolicyServer::EPass – The message is processed as normal; either by passing it to the ServiceL() method of a session or, in the case of a connection message, by creating a new session.

- CPolicyServer::EFail – The message is considered to have failed its policy check; the aFailureAction parameter is used to determine what to do next (on entry this parameter is initialized to EFailClient, the common case).

- CPolicyServer::EAsync – The derived class is responsible for further processing of the message; the policy server framework will do nothing more with it.

This last case, EAsync, deserves a little more discussion. If either of the custom methods returns this value, it effectively removes the corresponding message object from the policy server framework’s control. It is then up to the specific server implementation to handle the message as and when it sees fit, in the same way as any other message within the ‘normal’ ServiceL() processing path of the server. That is, the same error-handling rules apply here as to any other message that might be completed asynchronously within the server. This return value is most often used where the policy to be applied can only be determined after an asynchronous operation has been performed, such as fetching some data from another server or prompting the user. Once this has been done, you can insert the message back into the policy server to continue processing. This is achieved by calling CPolicyServer::ProcessL() in the case of a policy pass, or CPolicyServer::CheckFailedL() in the case of a policy check failure. You can also complete the message directly, for example, through RMessagePtr2::Complete(KErrPermissionDenied); however, this skips the opportunity for any further custom failure action to be performed on the message.
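The EAsync hand-off can be sketched as a portable C++ model. `DeferringServer`, `OnDecision()`, and the `Message` struct are hypothetical; the private `ProcessL()` and `CheckFailedL()` stand in for the CPolicyServer methods named above and simply complete the message. A real server would more likely keep a copy of the RMessage2 than a pointer, and must cope with the client going away while the decision is pending.

```cpp
#include <cstddef>
#include <deque>

constexpr int KErrNone = 0;
constexpr int KErrPermissionDenied = -46;  // value as in e32err.h

enum class CustomResult { kPass, kFail, kAsync };

// Hypothetical stand-in for RMessage2.
struct Message {
    int function = 0;
    bool completed = false;
    int code = 0;
    void Complete(int aCode) {
        completed = true;
        code = aCode;
    }
};

// A policy server whose custom check needs an asynchronous answer (from
// another server, a user prompt, ...) before it can decide.
class DeferringServer {
public:
    CustomResult CustomSecurityCheckL(Message& aMsg) {
        iPending.push_back(&aMsg);  // the framework now leaves aMsg to us
        return CustomResult::kAsync;
    }
    // Called when the asynchronous decision for the oldest message arrives.
    void OnDecision(bool aAllowed) {
        Message* msg = iPending.front();
        iPending.pop_front();
        if (aAllowed)
            ProcessL(*msg);      // re-enter normal processing
        else
            CheckFailedL(*msg);  // re-enter failure processing
    }
    std::size_t Pending() const { return iPending.size(); }

private:
    // Stand-ins for CPolicyServer::ProcessL() and CheckFailedL().
    void ProcessL(Message& aMsg) { aMsg.Complete(KErrNone); }
    void CheckFailedL(Message& aMsg) { aMsg.Complete(KErrPermissionDenied); }
    std::deque<Message*> iPending;
};
```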

Summary

In this chapter we have seen how to go about designing security into your client–server implementation. The simple example at the start gave a taste of the new machinery that is available within the context of a Symbian OS server, as you set about protecting it. It also demonstrated some of the fundamental principles of the security architecture:

- Security checks should only be made when a process boundary (that is, a trust boundary) is crossed.

- Where servers abstract complex underlying resources they may also require a complex security policy for access to those resources, whilst clients typically have a simple connection policy.

- The kernel is the trusted intermediary between client and server, and both client and server rely on it for the implementation of their security policies.

The rest of the chapter then concentrated on the ways in which you can use these basic mechanisms to maximum benefit. First we looked at the threats that are specific to a server, and what you should consider when analyzing the threat model of a server. Next, we saw the various security measures available within the operating system, the frameworks that are available for use within servers, and how to design usage of these into your server. Finally, we went through some notes on implementing servers, looking in particular detail at the policy server framework and how to use it.